VxRail 8.0.000 & vSAN Express Storage Architecture (vSAN ESA) - next evolution of vSAN architecture now available for VxRail

VMware's vSAN OSA and vSAN ESA

Since VMware launched vSAN Original Storage Architecture (OSA) as a software-defined storage product, it has always been a two-tier architecture built from one to five disk groups per host. Each disk group has one tier of dedicated caching devices, looking after the performance of your cluster, and a second tier of dedicated capacity drives.

With vSphere release 8.0, customers will now have another option during cluster deployment for their 3-node, 2-node and stretched VxRail clusters with VMware’s new vSAN Express Storage Architecture (ESA).

vSAN ESA is a new single-tier architecture where all the drives in your cluster will contribute towards both cache and capacity requirements, with a new log-structured file system that takes advantage of the improved capabilities of modern hardware, driving better performance and space efficiency of your clusters, all the while consuming fewer CPU resources.

How did vSAN ESA come about?

If we look at what has been happening with hardware and networking over the last ten to twenty years, we have seen an increased adoption of NVMe-based disk drives, with potential customers now extending from on-prem to cloud service providers.

While vSAN OSA has been catering for ‘older’ device types, so think SATA/SAS (and yes, some newer types too), this approach of covering all bases with old and newer hardware has limited how vSAN could progress in a modern world.

With NVMe all-flash devices shifting the bottleneck away from storage devices, VMware have been able to re-imagine their vSAN architecture by taking advantage of these NVMe capabilities. As a result, vSAN ESA can process data in an extremely efficient way, with near device-level performance.

Data Services and vSAN ESA's log-structured file system (vSAN LFS)

While vSAN ESA builds on the existing vSAN stack, it introduces some new layers to the vSAN process, while also moving data services to the top of the stack.

These data services, assuming they have been enabled, will take place BEFORE the data is processed down through to the storage pool devices. Immediately compressing and encrypting the data at ingestion reduces CPU cycles and network bandwidth usage for vSAN ESA. This is different to vSAN OSA where we see I/O amplification penalties occurring due to data being re-processed between cache and capacity tiers when the data is being moved.

[Note: Compression is enabled as default for vSAN ESA with an opt-in selection being required during cluster deployment for encryption (encryption cannot be disabled later once configured).]
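To make the "process once at ingestion" idea a little more concrete, here is a minimal sketch in Python. It is only an illustration: `zlib` stands in for whatever compression vSAN actually uses, the function names are hypothetical, and the second function is a simplified contrast rather than an exact model of vSAN OSA.

```python
import zlib


def ingest_then_replicate(payload: bytes, replicas: int) -> dict:
    """Compress once at ingestion, then ship the compressed bytes to each replica."""
    compressed = zlib.compress(payload)  # single compression pass
    return {
        "compress_passes": 1,
        "bytes_on_wire": len(compressed) * replicas,
    }


def replicate_then_compress(payload: bytes, replicas: int) -> dict:
    """Ship raw bytes to each replica and compress on arrival at each one."""
    for _ in range(replicas):
        zlib.compress(payload)  # one compression pass per replica
    return {
        "compress_passes": replicas,
        "bytes_on_wire": len(payload) * replicas,
    }


if __name__ == "__main__":
    block = b"vSAN ESA " * 4096  # deliberately compressible sample data
    print(ingest_then_replicate(block, replicas=3))
    print(replicate_then_compress(block, replicas=3))
```

With compressible data, the first approach needs fewer compression passes and puts fewer bytes on the wire, which is the effect described above.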

One of the new layers you will see with vSAN ESA is a new patented file system called the vSAN log-structured file system, or vSAN LFS for short. vSAN LFS takes this compressed and encrypted data, ingests it quickly, and prepares it for a very efficient full stripe write.

The second new layer is the optimised vSAN LFS Object Manager. This is built around a new high-performance block engine and key value store, with objects being stored in two legs: the performance leg and the capacity leg. The component data is organised in the performance leg prior to an efficient full stripe write to the capacity leg. Full stripe writes are done asynchronously, and the FTT (failures to tolerate) is maintained throughout.

This approach allows large write payloads to be delivered to the storage pool devices quickly and efficiently, minimising overhead for metadata, and delivering near device-level performance.

Figure 6. vSAN LFS and vSAN LFS Object Manager
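The two-leg idea can be sketched with a toy model. This is not VMware's implementation: the class name, the stripe width of four data blocks, and the in-memory lists are all assumptions for illustration, and parity, FTT and metadata handling are left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class ToyLogStructuredWriter:
    """Toy model: small writes land in a log (performance leg) and are
    later moved to the capacity leg as whole stripes."""

    stripe_width: int = 4  # data blocks per full stripe (assumed value)
    performance_leg: list = field(default_factory=list)
    capacity_leg: list = field(default_factory=list)

    def write(self, block: bytes) -> str:
        # Append-only: the write is acknowledged as soon as it is logged.
        self.performance_leg.append(block)
        return "ack"

    def flush_full_stripes(self) -> int:
        """Move complete stripes to the capacity leg and return how many were
        written. A real system would do this asynchronously in the background."""
        written = 0
        while len(self.performance_leg) >= self.stripe_width:
            stripe = tuple(self.performance_leg[: self.stripe_width])
            del self.performance_leg[: self.stripe_width]
            self.capacity_leg.append(stripe)
            written += 1
        return written


if __name__ == "__main__":
    writer = ToyLogStructuredWriter()
    for i in range(10):
        writer.write(bytes([i]))
    print(writer.flush_full_stripes())   # 2 full stripes written
    print(len(writer.performance_leg))   # 2 blocks still buffered
```

The point of the sketch is that the capacity leg only ever receives complete stripes, so it never has to read back an existing stripe in order to update part of it.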

Highlights for vSAN ESA Data Services

- Data Services applied only once at ingestion
  - Reduces network payload
  - Minimises I/O amplification
  - Lowers overall CPU consumption
- Compression
  - Enabled by default
  - Changed to VM level (for OSA, compression is at cluster level)
- Encryption
  - Enabled during configuration
  - Supported encryption
    - External Key Management Server (KMS) or
    - Native Key Provider
- Fast writes to Performance Leg
  - Rapid write acknowledgement
  - Eliminates read-modify-write overhead
- Efficient full stripe writes to Capacity Leg
  - Data organised in performance leg for efficient full stripe write
  - Performed asynchronously
  - FTT maintained in performance and capacity legs
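For anyone wondering why read-modify-write matters so much, the textbook RAID arithmetic can be written down in a few lines. The functions below are a generic sketch, not vSAN internals, and the 4+2 stripe geometry used in the example is an assumption for a RAID-6 layout.

```python
def rmw_ios_per_block(parity_blocks: int) -> int:
    """Partial-stripe update: read the old data block and old parity blocks,
    then write the new data block and new parity blocks."""
    reads = 1 + parity_blocks
    writes = 1 + parity_blocks
    return reads + writes


def full_stripe_ios_per_block(data_blocks: int, parity_blocks: int) -> float:
    """Full-stripe write: no reads, because parity is computed from data that
    is already in memory. Cost is averaged over the data blocks written."""
    return (data_blocks + parity_blocks) / data_blocks


if __name__ == "__main__":
    print(rmw_ios_per_block(parity_blocks=2))                        # 6
    print(full_stripe_ios_per_block(data_blocks=4, parity_blocks=2)) # 1.5
```

Under these assumptions, a small update to a dual-parity stripe costs six device I/Os, while a full stripe write averages 1.5 I/Os per data block.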

RAID recommendation

Due to the elimination of read-modify-write overhead for erasure coding at ingestion, performance writes are committed much quicker than you would see for vSAN OSA. A RAID-6 configuration can be implemented on your clusters with the same performance that you would see for a RAID-1 configuration, and with better space efficiency. VMware recommends that a RAID-6 configuration is implemented wherever possible, so that you can avail of all the enhanced resilience while maintaining performance levels for your clusters.
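The space-efficiency side of that recommendation is simple arithmetic. The sketch below uses the standard overhead multipliers for mirroring and for a 4+2 erasure-coded layout; the function name and dictionary keys are made up for this example, and real sizing should also allow for slack space and metadata, which are ignored here.

```python
# Raw capacity consumed per unit of usable data (standard RAID arithmetic).
CAPACITY_MULTIPLIER = {
    "RAID-1 FTT=1": 2.0,        # two full copies
    "RAID-1 FTT=2": 3.0,        # three full copies
    "RAID-6 FTT=2 (4+2)": 1.5,  # four data blocks plus two parity blocks
}


def raw_capacity_needed(usable_tb: float, scheme: str) -> float:
    """Raw TB needed to store `usable_tb` of data under the given scheme."""
    return usable_tb * CAPACITY_MULTIPLIER[scheme]


if __name__ == "__main__":
    for scheme in CAPACITY_MULTIPLIER:
        print(scheme, raw_capacity_needed(100, scheme), "TB raw")
```

For 100 TB of data that must survive two failures, mirroring needs 300 TB of raw capacity while the 4+2 layout needs 150 TB.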

vSAN ESA highlights

- Flexible architecture using single tier in vSAN 8
  - No disk groups. All devices contribute to performance and capacity
  - Reduction in time needed for resynchronisation
  - Removes disk group as a failure domain
- Optimised high performance (near device-level)
  - All drives in storage pool store metadata, capacity legs and performance legs
  - Store data as RAID-6 at the performance of RAID-1
  - Performance and capacity balanced across entire datastore
  - Supports TLC NVMe drives only
- Ease of Management
  - Shares vSAN distributed object manager
  - Uses same storage policy management
  - No discernible difference in vSphere management

What does this mean for VxRail?

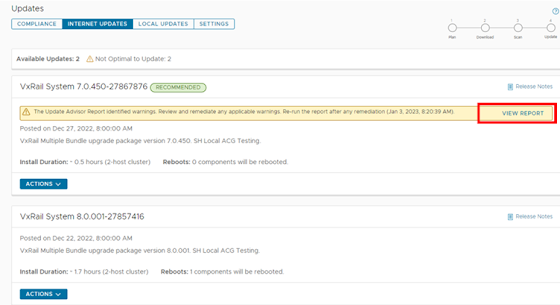

VxRail 8.0.000 was simshipped with vSphere 8.0 at the beginning of the year, with support for both vSAN ESA and vSAN OSA in this release.

Customers will have their choice of vSAN architecture for their new VxRail deployments. Whether they opt for vSAN ESA or vSAN OSA will be dependent on customer preference and also the type of VxRail nodes that they are purchasing.

VxRail will have two node types that will support vSAN ESA, starting with our E660N and P670N nodes, which are both all-NVMe nodes.

For existing VxRail clusters, it is expected that vSAN OSA will continue to be supported for long into the future, so customers can keep their existing infrastructure and upgrade to VxRail 8.0.000 without a problem.

For vCenter, a customer-supplied vCenter can manage both vSAN OSA and vSAN ESA clusters, as long as it has been upgraded to vCenter 8.0.

Right now, vSAN ESA is supported for greenfield VxRail deployments only.

VxRail Prerequisites/Requirements

- Each supported VxRail node has 24 NVMe-enabled disk drive slots
- A minimum of six NVMe drives per VxRail node
- Cannot mix VxRail P670N and E660N nodes in the same cluster
- Minimum 32 CPU cores per VxRail node
- Minimum 512 GB memory capacity per VxRail node
- Customer-managed vCenter (must be at vCenter 8.0) can manage a mix of both vSAN OSA and vSAN ESA clusters
- 25GbE or 100GbE OCP/PCIe physical networking
- Existing GPU models supported at initial release
- vSAN ESA is supported with VxRail for greenfield deployment only
- Licensing:
  - vSAN ESA requires vSAN Advanced, Enterprise, or Enterprise Plus license edition (note: vSAN data-at-rest encryption requires vSAN Enterprise or Enterprise Plus)
  - vSphere version 8 licenses are required for each VxRail node
  - A version 8 license is required for vCenter instances supporting a VxRail cluster running vSAN ESA.
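If you want to sanity-check a planned cluster against the hardware items in this list, a small script can do it. The sketch below is purely illustrative: `NodeSpec` and `check_esa_cluster` are hypothetical names, not part of any Dell or VMware tool, and the licensing, GPU and greenfield items are not checked.

```python
from dataclasses import dataclass

SUPPORTED_MODELS = {"E660N", "P670N"}  # node types named in this post
SUPPORTED_NIC_GBE = {25, 100}


@dataclass
class NodeSpec:
    """Hypothetical description of one planned VxRail node."""
    model: str
    nvme_drives: int
    cpu_cores: int
    memory_gb: int
    nic_gbe: int


def check_esa_cluster(nodes, vcenter_version):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    models = {n.model for n in nodes}
    if len(models) > 1:
        problems.append(f"mixed node models in one cluster: {sorted(models)}")
    if not vcenter_version.startswith("8."):
        problems.append(f"vCenter {vcenter_version}: version 8.0 is required")
    for i, n in enumerate(nodes):
        if n.model not in SUPPORTED_MODELS:
            problems.append(f"node {i}: model {n.model} is not in the supported list")
        if not 6 <= n.nvme_drives <= 24:
            problems.append(f"node {i}: {n.nvme_drives} NVMe drives (need 6 to 24)")
        if n.cpu_cores < 32:
            problems.append(f"node {i}: {n.cpu_cores} CPU cores (minimum 32)")
        if n.memory_gb < 512:
            problems.append(f"node {i}: {n.memory_gb} GB memory (minimum 512)")
        if n.nic_gbe not in SUPPORTED_NIC_GBE:
            problems.append(f"node {i}: {n.nic_gbe}GbE networking (need 25 or 100)")
    return problems


if __name__ == "__main__":
    plan = [NodeSpec("P670N", 6, 32, 512, 25) for _ in range(3)]
    print(check_esa_cluster(plan, "8.0") or "no problems found")
```

Returning a list of problems rather than stopping at the first one makes it easier to fix a whole bill of materials in a single pass.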

We’re not saying this is a must for VxRail customers right now. Keep doing what you’re doing with your existing vSAN OSA clusters, if you so wish. However, if you DO want to transition to vSAN ESA, then why not start by adopting vSAN ESA with any new all-NVMe VxRail clusters you are deploying, all the while using software that you are already accustomed to, with the added benefits of better performance and space efficiency.

#IWORK4DELL

Opinions expressed in this article are entirely my own and may not be representative of the views of Dell Technologies.
